The current AI landscape is dominated by a heavy “GPU Tax” (the massive computational cost and energy required to run powerful models) and by growing concerns over the privacy risks of sending sensitive data to the cloud. For businesses and developers, this status quo is becoming unsustainable. This is where Liquid AI enters the conversation. As I will explore in this Liquid AI Review, this technology isn’t just another Large Language Model (LLM) competing on parameter count. It represents a fundamental shift in how artificial intelligence is built and deployed, moving us away from massive server farms and toward a more efficient, agile future.

What is Liquid AI?

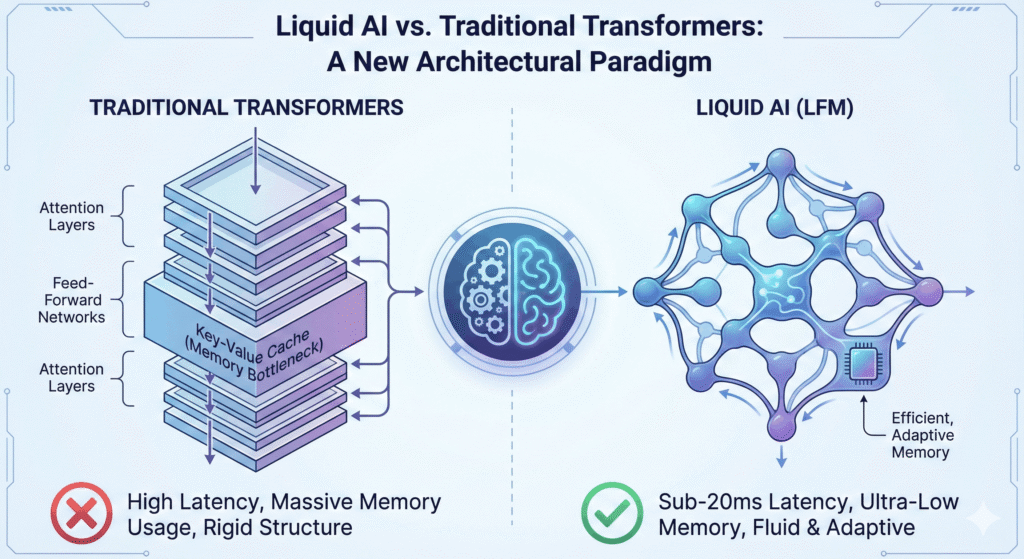

To understand the hype, one must first define Liquid Foundation Models (LFMs). Unlike the Generative Pre-trained Transformers (GPTs) that currently rule the market, Liquid AI uses a novel architecture that departs from the standard transformer design and is rooted in Liquid Neural Networks.

This architecture is inspired by the nervous systems of small organisms and is designed to be highly adaptive. Where transformers attend over a fixed window of tokens, Liquid Neural Networks treat sequential data as a continuously evolving signal: the way each neuron responds shifts with the input it receives. As a result, the network’s behavior keeps adapting to incoming data after training instead of being “frozen” in time, even though its learned weights stay fixed. This addresses the rigidity of traditional deep learning and yields a system that is considerably more adaptable to changing data streams.
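To make “adaptive” concrete, here is a minimal sketch of a liquid time-constant (LTC) layer, the building block described in the Liquid Neural Network research literature. It is a simplified illustration under stated assumptions (a single sigmoid gate, hand-picked hypothetical weights, a fused Euler solver step), not a description of how LFMs are implemented internally. The key idea is that the gate `f` depends on the current input, so each neuron’s effective time constant, `tau / (1 + tau * f)`, changes with the data while the weights stay fixed.

```python
import math
from typing import List


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def ltc_step(x: List[float], inputs: List[float], w_in: List[List[float]],
             w_rec: List[List[float]], bias: List[float], tau: List[float],
             a: List[float], dt: float) -> List[float]:
    """One fused Euler step of dx/dt = -(1/tau + f) * x + f * A for each neuron."""
    new_x = []
    for i in range(len(x)):
        # Simplified gate (assumption): one sigmoid layer over input and state.
        pre = bias[i]
        pre += sum(w * u for w, u in zip(w_in[i], inputs))
        pre += sum(w * h for w, h in zip(w_rec[i], x))
        f = sigmoid(pre)
        # Fused solver: x_next = (x + dt*f*A) / (1 + dt*(1/tau + f)).
        new_x.append((x[i] + dt * f * a[i]) / (1.0 + dt * (1.0 / tau[i] + f)))
    return new_x


def effective_tau(tau: float, f: float) -> float:
    """Decay time constant 1 / (1/tau + f): it shrinks as the gate f opens."""
    return tau / (1.0 + tau * f)


# Hypothetical 2-neuron layer driven by a 1-D input sequence.
w_in = [[1.5], [-2.0]]
w_rec = [[0.0, 0.5], [0.5, 0.0]]
bias = [0.0, 0.1]
tau = [1.0, 2.0]
a = [1.0, 1.0]
state = [0.0, 0.0]
for u in [0.0, 1.0, 1.0, -1.0, 0.5]:
    state = ltc_step(state, [u], w_in, w_rec, bias, tau, a, dt=0.1)
print("final state:", state)
print("tau_eff, gate nearly closed:", effective_tau(1.0, sigmoid(-2.0)))
print("tau_eff, gate mostly open:  ", effective_tau(1.0, sigmoid(2.0)))
```

Because the update divides by `1 + dt * (1/tau + f)`, a state that starts between 0 and a non-negative `A` stays in that range for any positive `dt`.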

Performance & Efficiency

When comparing LFMs with transformer models, the efficiency gains are striking. Traditional transformers must store the history of a conversation in a Key-Value (KV) cache, which grows linearly with the length of the text and has to be read back from memory for every new token, consuming both memory capacity and bandwidth. Liquid’s architecture is designed to sidestep much of this bottleneck, which translates into a far smaller memory footprint.
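The linear growth is easy to quantify. A transformer stores one key vector and one value vector per token, per layer, per KV head, so the cache size is `2 × layers × kv_heads × head_dim × bytes_per_element × tokens`. The sketch below compares that with a recurrent-style layer that keeps a fixed-size state; both configurations are hypothetical and are not the published dimensions of any Liquid AI model.

```python
def kv_cache_bytes(seq_len: int, n_layers: int, n_kv_heads: int, head_dim: int,
                   bytes_per_elem: int = 2, batch: int = 1) -> int:
    """KV cache size: the factor 2 covers one Key and one Value vector per token."""
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_elem * seq_len * batch


def fixed_state_bytes(n_layers: int, state_dim: int,
                      bytes_per_elem: int = 2, batch: int = 1) -> int:
    """A recurrent layer keeps one fixed-size state vector, whatever the sequence length."""
    return n_layers * state_dim * bytes_per_elem * batch


# Hypothetical config: 32 layers, 8 KV heads of dimension 128, fp16 (2 bytes).
for n in (1_024, 8_192, 32_768):
    kv = kv_cache_bytes(n, n_layers=32, n_kv_heads=8, head_dim=128)
    st = fixed_state_bytes(n_layers=32, state_dim=4_096)
    print(f"{n:>6} tokens: KV cache {kv / 2**20:8.1f} MiB | fixed state {st / 2**20:.2f} MiB")
```

With these assumed numbers the KV cache reaches 1 GiB at 8,192 tokens and 4 GiB at 32,768 tokens, while the fixed state stays at 0.25 MiB regardless of length.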

This efficiency translates directly to speed. Liquid AI’s models are reported to reach latencies under 20 ms, a figure that standard LLMs typically cannot match without substantial hardware acceleration. The lean design also leaves room for hardware-specific optimization, allowing capable AI to run on a wide range of devices, from standard CPUs and GPUs to specialized NPUs. Furthermore, on Small Language Model (SLM) benchmarks, Liquid’s approach punches far above its weight class, delivering results that rival much larger models while consuming a fraction of the power.
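Latency figures depend heavily on hardware, model size, and what exactly is timed (time to first token versus time per generated token), so it is worth checking them on your own device. Below is a minimal sketch of such a check, assuming you can wrap one decode step of your local runtime in a Python callable. `fake_decode_step` is a hypothetical stand-in, not part of any Liquid AI SDK.

```python
import math
import statistics
import time
from typing import Callable, Dict


def measure_latency_ms(step: Callable[[], object], warmup: int = 5,
                       runs: int = 50) -> Dict[str, float]:
    """Call `step` repeatedly and summarize its wall-clock latency in milliseconds."""
    for _ in range(warmup):
        step()  # warm-up calls are excluded (caches, lazy initialization)
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        step()
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    # Nearest-rank 95th percentile.
    p95 = samples[math.ceil(0.95 * len(samples)) - 1]
    return {"median_ms": statistics.median(samples), "p95_ms": p95, "max_ms": samples[-1]}


def fake_decode_step() -> None:
    """Hypothetical stand-in for generating one token with a local model."""
    time.sleep(0.002)


stats = measure_latency_ms(fake_decode_step)
print(stats)
print("p95 under a 20 ms budget:", stats["p95_ms"] < 20.0)
```

Reporting the 95th percentile alongside the median matters for real-time use: a model that is fast on average but occasionally stalls can still miss a hard deadline.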

Solving User Pain Points

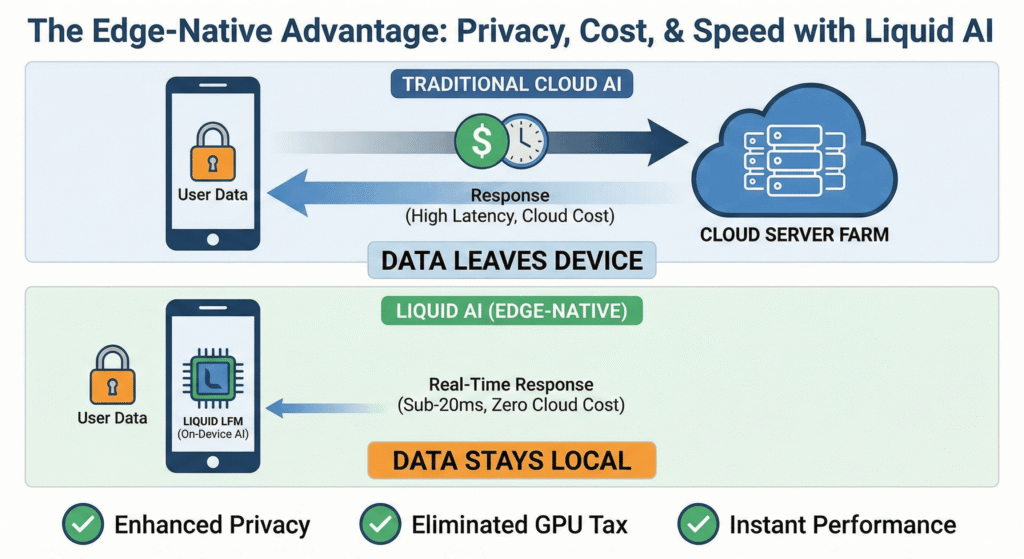

The primary appeal of Liquid AI lies in how it solves critical implementation hurdles. First and foremost is data privacy. Because the models run locally on the device, sensitive user data never needs to leave it. This is a game-changer for healthcare, finance, and enterprise sectors where privacy is non-negotiable.

Secondly, there are no per-call cloud costs. By eliminating the need for constant, expensive cloud API calls, businesses can drastically reduce their operational overhead. Finally, the edge-native design unlocks real-time AI performance in environments where internet connectivity is unreliable or network latency is unacceptable. Whether it is a robot reacting to a physical obstacle or an assistant that must keep working offline (a common point in Liquid AI vs Llama 3 comparisons), the ability to process data locally and instantly opens up new frontiers for autonomous systems and commerce.
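The three pain points above can be expressed as a simple local-first routing policy for an application that has both an on-device model and a cloud model available. This is a hypothetical sketch, not a Liquid AI or LEAP API: the `Request` fields and the 300 ms cloud round-trip figure are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    LOCAL = "local"  # on-device model
    CLOUD = "cloud"  # larger hosted model


@dataclass
class Request:
    contains_sensitive_data: bool
    latency_budget_ms: float
    network_available: bool


# Assumption for illustration only: typical round trip to a hosted LLM API.
ASSUMED_CLOUD_ROUND_TRIP_MS = 300.0


def route(req: Request) -> Target:
    """Local-first policy: use the cloud only when nothing forces local execution."""
    if req.contains_sensitive_data:
        return Target.LOCAL  # privacy: data never leaves the device
    if not req.network_available:
        return Target.LOCAL  # offline: the local model is the only option
    if req.latency_budget_ms < ASSUMED_CLOUD_ROUND_TRIP_MS:
        return Target.LOCAL  # real-time: a network round trip would exceed the budget
    return Target.CLOUD  # otherwise, defer to the general-purpose cloud model


print(route(Request(contains_sensitive_data=False, latency_budget_ms=20.0, network_available=True)))
```

Checking privacy first means a sensitive request stays local even when the network is available and the latency budget is generous.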

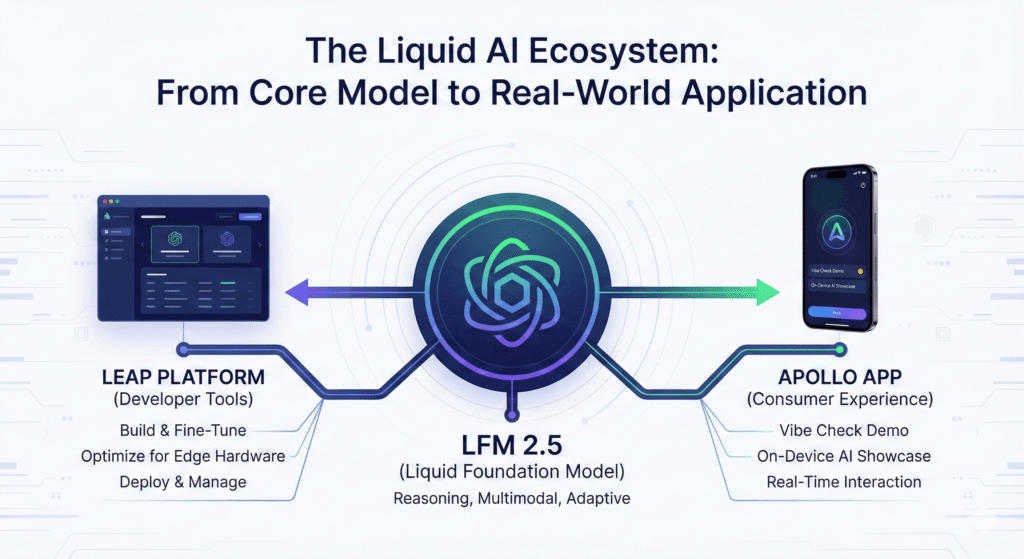

The Ecosystem: LFM 2.5, LEAP & Apollo

Liquid AI is not just a model; it is a growing ecosystem. The company has released LFM 2.5, the latest iteration of its Liquid Foundation Models, which further refines its reasoning and multimodal capabilities.

For developers, the LEAP platform provides the necessary tools to build, fine-tune, and deploy these models specifically for edge environments, taking much of the complexity out of getting a model running on specific hardware. On the consumer side, the Apollo app serves as a mobile “vibe check,” allowing users to experience the fluidity of the technology firsthand on their phones. It acts as a tangible demonstration of how LFM 2.5 and other models in the suite perform in a real-world, resource-constrained environment.

Conclusion

So, is the hype justified? The verdict of this Liquid AI Review is a resounding yes, specifically for edge deployments and privacy-first requirements. Liquid AI is a highly credible option for organizations looking to escape the cloud processing trap. While massive cloud models will likely remain dominant for general-purpose reasoning, the future is undeniably hybrid: we are moving toward a world where specialized local AI execution works alongside general cloud models, and Liquid AI is leading that charge.